The Future of Product Development in Regulated Industries.

For decades, regulated industries lived in a trap of their own design: move fast and break things, or move slow and stay compliant. That trade-off is collapsing.

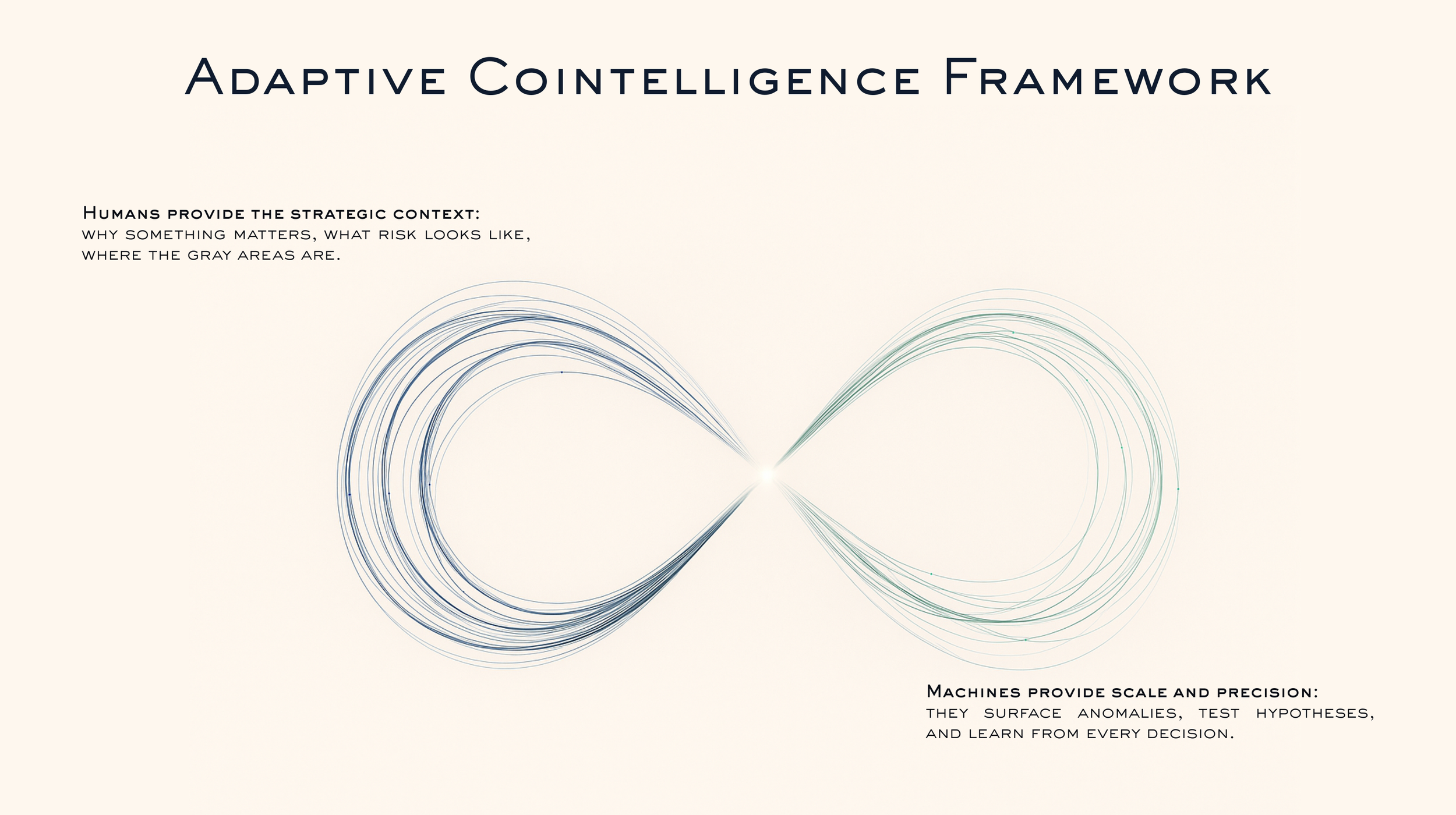

A new paradigm is taking shape: adaptive co-intelligence, meaning systems where humans and machines share context, evidence, and judgment in a single loop so that speed and safety compound together. Software stops thinking for practitioners and starts thinking with them and learning from them.

From Automation to Amplification.

In health, finance, and insurance, the AI story has mostly been about automation: faster underwriting, automated claims, instant medical coding. Useful, but brittle. Rules can’t anticipate every edge case. Models drift. Regulations change faster than static systems can keep up. The result is speed without confidence.

Enter amplification.

Amplification reframes AI as a force multiplier for judgment: a way to extend the reach, accuracy, and consistency of human decisions. With adaptive co-intelligent systems, humans define the boundaries and values, and machines continuously interpret and enforce them. It’s the difference between building software that executes decisions and building software that helps teams make better ones.

Why now.

Four forces are colliding to make adaptive co-intelligence the cost of entry:

Regulatory velocity. Laws and standards evolve quickly. Co-intelligent products absorb new rules as code and help teams test workflows without full rebuilds.

Edge-case complexity. Real decisions rarely fit neat rules. Human-in-the-loop design improves precision over time instead of failing at the margins.

Trust & transparency. Black-box automation doesn’t fly when choices affect health or capital. Buyers expect systems that can show their work.

User expectations. Professionals want AI that extends their judgment rather than replacing it. Products that learn from interaction and adapt become indispensable.

What adaptive co-intelligent software looks like.

Think of a continuous loop between expertise and intelligence.

The result is a system grounded in human intent and powered by machines that interpret, enforce, and improve.

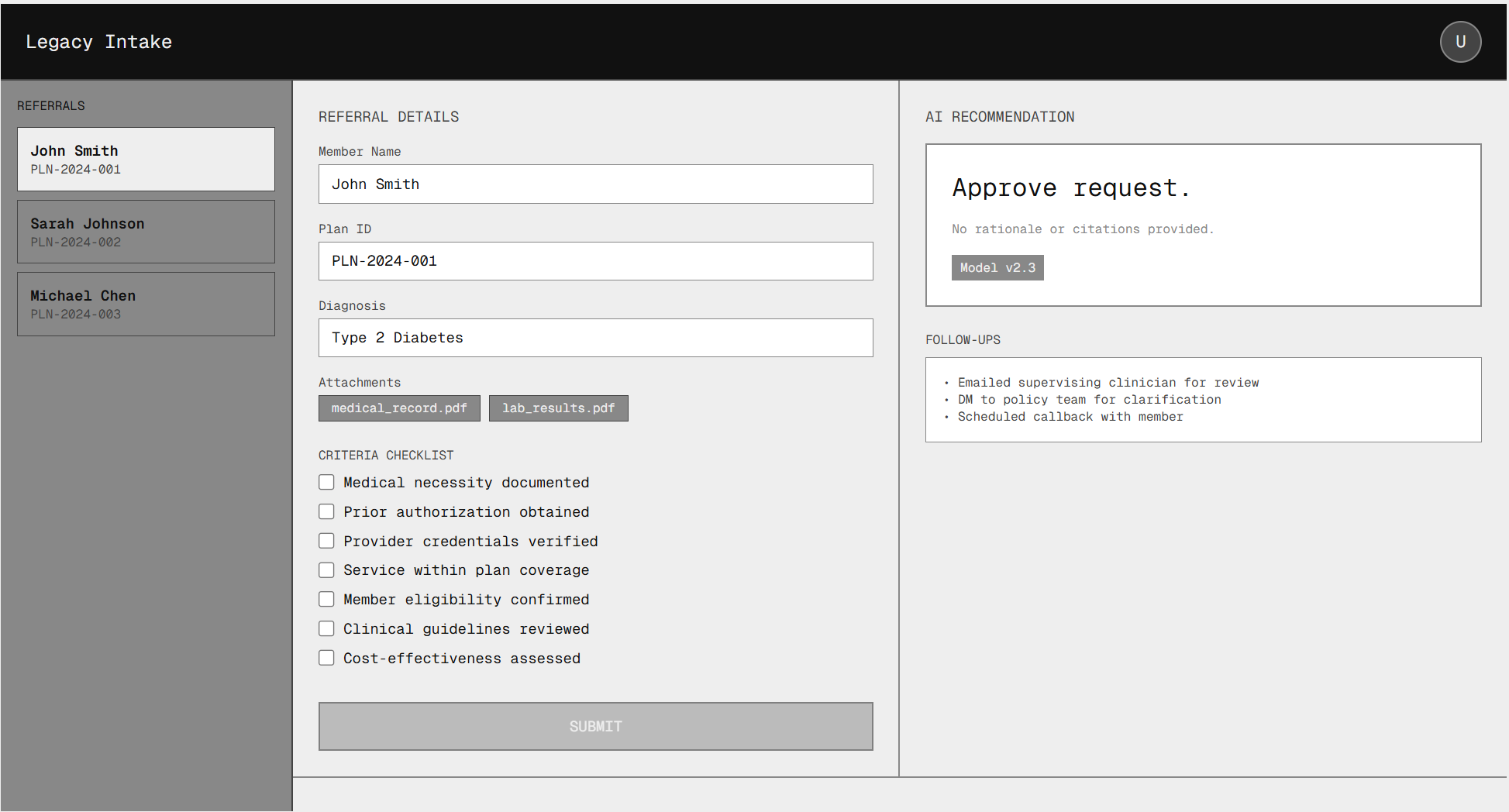

A simple before-and-after makes it concrete.

Without embedded co-intelligence:

At morning intake, a care manager reviews a referral that could go either way. The legacy tool offers checkboxes and a binary submit. An “AI assistant” plugin produces a confident recommendation without reasoning or citations. The tool is fast but opaque, so the manager double-checks offline, asks a colleague, and delays the decision. Throughput suffers, and audit risk grows because the rationale lives in email.

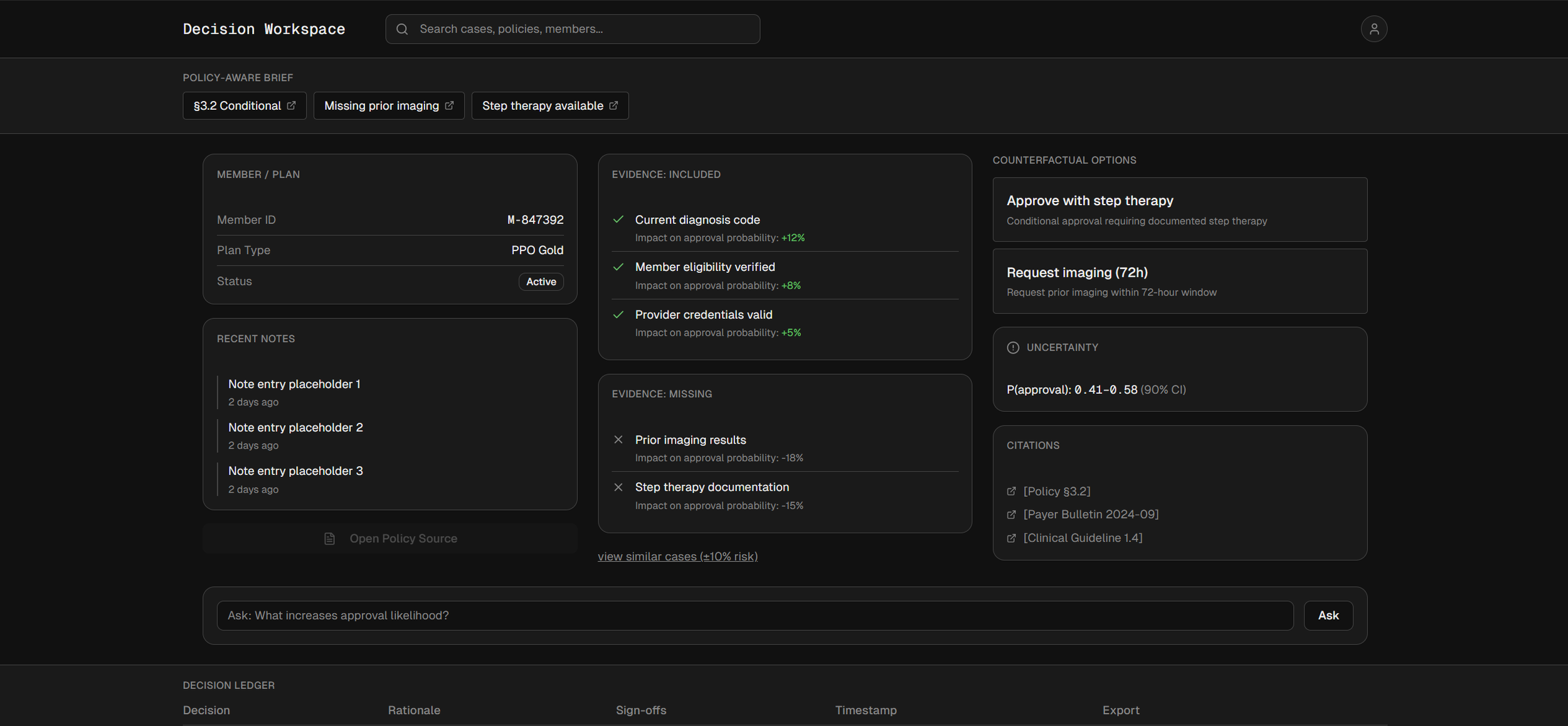

With embedded co-intelligence:

The case opens with a policy-aware brief: “Coverage conditional under §3.2; missing prior imaging; step therapy available.” Each clause links to source policy and similar past cases. An evidence pane shows included and missing artifacts, with expected impact on approval probability. A counterfactual panel offers two viable paths: approve with step therapy or request imaging within 72 hours. The manager asks, “What increases approval likelihood?” The system answers with data, uncertainty bounds, and references and then records the decision, rationale, and sign-offs in an exportable ledger.

The point is to remove friction from judgment, not to remove judgment itself. By exposing constraints, evidence, uncertainty, and options in the same frame, adaptive co-intelligence turns high-stakes ambiguity into a navigable map.

Three mechanics power the experience:

A policy engine translates statutes and contracts into testable rules.

An evidence graph ties each claim to sources and timestamps.

A reasoning loop generates options and quantifies trade-offs.
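The three mechanics above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference design: the class names (`Rule`, `Evidence`, `Finding`), the clause §4.1, the case fields, and the source identifier are all hypothetical. The `options` function is only a stand-in for a reasoning loop, since it names the gaps without quantifying any trade-offs.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable


@dataclass(frozen=True)
class Evidence:
    """One node in the evidence graph: a claim tied to a source and a date."""
    claim: str
    source: str          # hypothetical document identifier
    recorded_on: date


@dataclass(frozen=True)
class Rule:
    """A policy clause expressed as a testable predicate over a case."""
    clause: str
    description: str
    check: Callable[[dict], bool]


@dataclass(frozen=True)
class Finding:
    """The outcome of one rule, with the evidence indexed under its clause."""
    clause: str
    satisfied: bool
    evidence: tuple


def evaluate(case: dict, rules: list, evidence_index: dict) -> list:
    """Run every rule and attach whatever evidence is filed under its clause."""
    return [
        Finding(r.clause, r.check(case), tuple(evidence_index.get(r.clause, ())))
        for r in rules
    ]


def options(findings: list) -> list:
    """Propose next steps: approve if all clauses hold, else name the gaps."""
    gaps = [f.clause for f in findings if not f.satisfied]
    if not gaps:
        return ["approve"]
    return [f"request evidence for {clause}" for clause in gaps]


# Illustrative rules only; clause §4.1 and the case fields are made up.
RULES = [
    Rule("§3.2", "Prior imaging must be on file",
         lambda c: c.get("prior_imaging", False)),
    Rule("§4.1", "Referral must come from a licensed provider",
         lambda c: c.get("provider_licensed", False)),
]

EVIDENCE = {
    "§4.1": [Evidence("Provider license verified", "registry-lookup-001",
                      date(2024, 1, 15))],
}

case = {"prior_imaging": False, "provider_licensed": True}
findings = evaluate(case, RULES, EVIDENCE)
print(options(findings))
```

Because each rule is an ordinary predicate, a change in policy becomes a change to one entry in `RULES` that can be unit-tested against sample cases before it reaches a live workflow.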

Design shifts follow: explanations are interactive (“show me prior cases within ±10% risk”), uncertainty is expressed in business units, and the final decision is recorded as a ledger entry with citations that can be exported to auditors or patients. Overrides are embraced as learning signals that update policy or prompts.
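One way to picture the decision ledger and the override signal is the sketch below. It assumes a simple in-memory, append-only list and JSON export. The names `LedgerEntry` and `DecisionLedger`, the field layout, and the sample case and signer identifiers are hypothetical, and a production ledger would also need persistence, access control, and tamper evidence.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class LedgerEntry:
    """One recorded decision: what was suggested, what was chosen, and why."""
    case_id: str
    recommended: str
    decided: str
    rationale: str
    citations: tuple
    signed_by: str
    decided_at: str      # ISO 8601 timestamp

    @property
    def is_override(self) -> bool:
        # A human choosing differently from the suggestion is a learning signal.
        return self.decided != self.recommended


class DecisionLedger:
    """Append-only, in-memory record of decisions (illustrative only)."""

    def __init__(self):
        self._entries = []

    def record(self, case_id, recommended, decided, rationale,
               citations, signed_by, decided_at=None):
        timestamp = decided_at or datetime.now(timezone.utc).isoformat()
        entry = LedgerEntry(case_id, recommended, decided, rationale,
                            tuple(citations), signed_by, timestamp)
        self._entries.append(entry)
        return entry

    def overrides(self):
        """Entries where the human decision differed from the recommendation."""
        return [e for e in self._entries if e.is_override]

    def export_json(self) -> str:
        """Serialize every entry for an auditor; tuples become JSON arrays."""
        return json.dumps([asdict(e) for e in self._entries],
                          indent=2, ensure_ascii=False)


ledger = DecisionLedger()
ledger.record("case-1", "approve with step therapy", "approve with step therapy",
              "Coverage conditional under §3.2", ["§3.2"], "manager-a",
              decided_at="2024-01-15T09:00:00+00:00")
ledger.record("case-2", "approve with step therapy", "request imaging",
              "Prior imaging missing; clinician preferred imaging first",
              ["§3.2"], "manager-b", decided_at="2024-01-15T09:30:00+00:00")

print(len(ledger.overrides()))
print(ledger.export_json())
```

Keeping the recommendation and the final decision side by side in each entry is what makes overrides queryable later, so they can feed a review of the policy or prompts that produced the recommendation.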

The payoff.

What changes for the organization? Throughput improves because fewer cases bounce. Quality improves as decisions converge on consistent patterns with quantified uncertainty. Audits become simpler because the why is captured at the moment of decision. Finance notices: rework falls, net dollar retention ticks up as adjacent teams adopt the same decision surfaces, and CAC payback improves when onboarding drops from weeks of training to guided flows that explain themselves.

The deeper change is cultural. Teams stop arguing about whose tool is smarter and start asking which constraints should be explicit, which defaults are safe, and where a human must always sign.

What this means for founders and product leaders.

In regulated markets, judgment quality becomes both your product health metric and your sales proof. If your product raises the quality of decisions at scale, you ship faster, procurement accelerates, and expansion gets easier.